Are facemasks a problem for Voice Biometrics?

The effect of facemasks on Voice Biometrics: an experiment

Wearing a mask is now the primary, everyday way to limit the spread of coronavirus, and has been reported to reduce the daily growth rate of infections by around 45% in large-scale populations.

Face coverings have been mandatory on public transport in the UK since June 15th, and became mandatory in public in Germany in early April. In these two countries, they are the most familiar sign of ‘the new normal’. This raises a potential problem for voice biometric security.

People’s behaviours adapt to change, and these new behaviours then become normalised. Because of this, there is now a greater demand for contactless forms of verification and identification, especially as, alongside mask wearing, they become associated with the benefits of public health. Gloves prevent fingerprinting; masks hinder facial recognition. This blog will attempt to find out whether mask wearing affects the ability of voice biometric identification and verification to function properly.

A (very) brief overview of Voice Biometrics

Voice biometrics is a leading-edge technology that combines high levels of security with ease of access. It allows us to use our voices to navigate our everyday interactions with the digital world around us. A voiceprint, the key, is created either by the user repeating a passphrase, or passively through conversation with customer agents. When a user wishes to access a service protected by a voice biometric system, all they need to do is speak, and the voice biometric AI algorithm decides whether or not the sound of their voice in that moment matches the voiceprint on file, much as a key fits a lock. For example, voice biometrics is currently used in multi-layer authentication for logging into accounts that hold valuable information, such as online banking. It is used in healthcare to provide better diagnosis of patients, and is perhaps most widely deployed in customer-facing applications such as call centres, IVR, and mobile applications.
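To make the "key and lock" idea concrete, here is a minimal sketch of one generic way a match decision can be made: compare a feature vector from the new speech sample against the stored voiceprint and accept if the similarity clears a threshold. This is not VoiSentry's algorithm, which is not described here. The vectors, the `verify` function and the `0.8` threshold are all made-up assumptions for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)

def verify(voiceprint, sample, threshold=0.8):
    """Accept the sample if it is similar enough to the enrolled voiceprint.

    The threshold is a hypothetical value; a real system would tune it
    to balance false accepts against false rejects.
    """
    return cosine_similarity(voiceprint, sample) >= threshold

# Made-up feature vectors, purely for illustration.
enrolled = [0.9, 0.1, 0.4]
same_speaker = [0.85, 0.15, 0.42]
other_speaker = [0.1, 0.9, 0.2]

print(verify(enrolled, same_speaker))
print(verify(enrolled, other_speaker))
```

In this toy setup, the question about masks becomes whether a face covering shifts the sample's features far enough from the voiceprint to push the score below the threshold.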

The problem masks could cause for Voice Biometrics

Face coverings come in all sizes and thicknesses and can impact a wearer’s speech patterns, by distorting the sound of their speech or by greatly attenuating it. The masks that are most effective, which create a tighter seal around the mouth and nose, would be expected to have more of an acoustic effect on speech sounds, and therefore could possibly affect the verification process - as the natural sound of the user’s voice will have been changed.
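Attenuation of this kind is usually expressed in decibels. As a rough sketch, the overall level change between an unmasked and a masked recording could be estimated from their RMS amplitudes. The signals below are synthetic stand-ins (a tone and a copy at half amplitude), not measurements from this experiment, and the function names are our own.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_change_db(reference, test):
    """Level of `test` relative to `reference` in dB (negative means quieter)."""
    ref_level = rms(reference)
    test_level = rms(test)
    if ref_level == 0 or test_level == 0:
        raise ValueError("signals must not be silent")
    return 20 * math.log10(test_level / ref_level)

# Synthetic stand-ins: a 200 Hz tone sampled at 8 kHz, and the same tone
# at half amplitude to mimic a covering that attenuates the signal.
unmasked = [math.sin(2 * math.pi * 200 * n / 8000) for n in range(8000)]
masked = [0.5 * s for s in unmasked]

print(round(level_change_db(unmasked, masked), 2))
```

A real mask would not attenuate all frequencies equally, so a single figure like this only summarises the overall effect.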

Another possible problem comes from the fact that the way that a person speaks through a mask is also likely to change. It may be the case that users wearing masks tend to speak more clearly than without one, as they may be self-conscious of the fact that they might not be heard properly, thus changing the sound being analysed on a more fundamental level.

Overall, it's important that the convenience of Voice Biometrics should not be hampered by the user wearing a mask. The simple question therefore is: are Voice Biometric algorithms affected by the user wearing a mask, and if so, how can the algorithm adapt to take into account mask-wearing users?

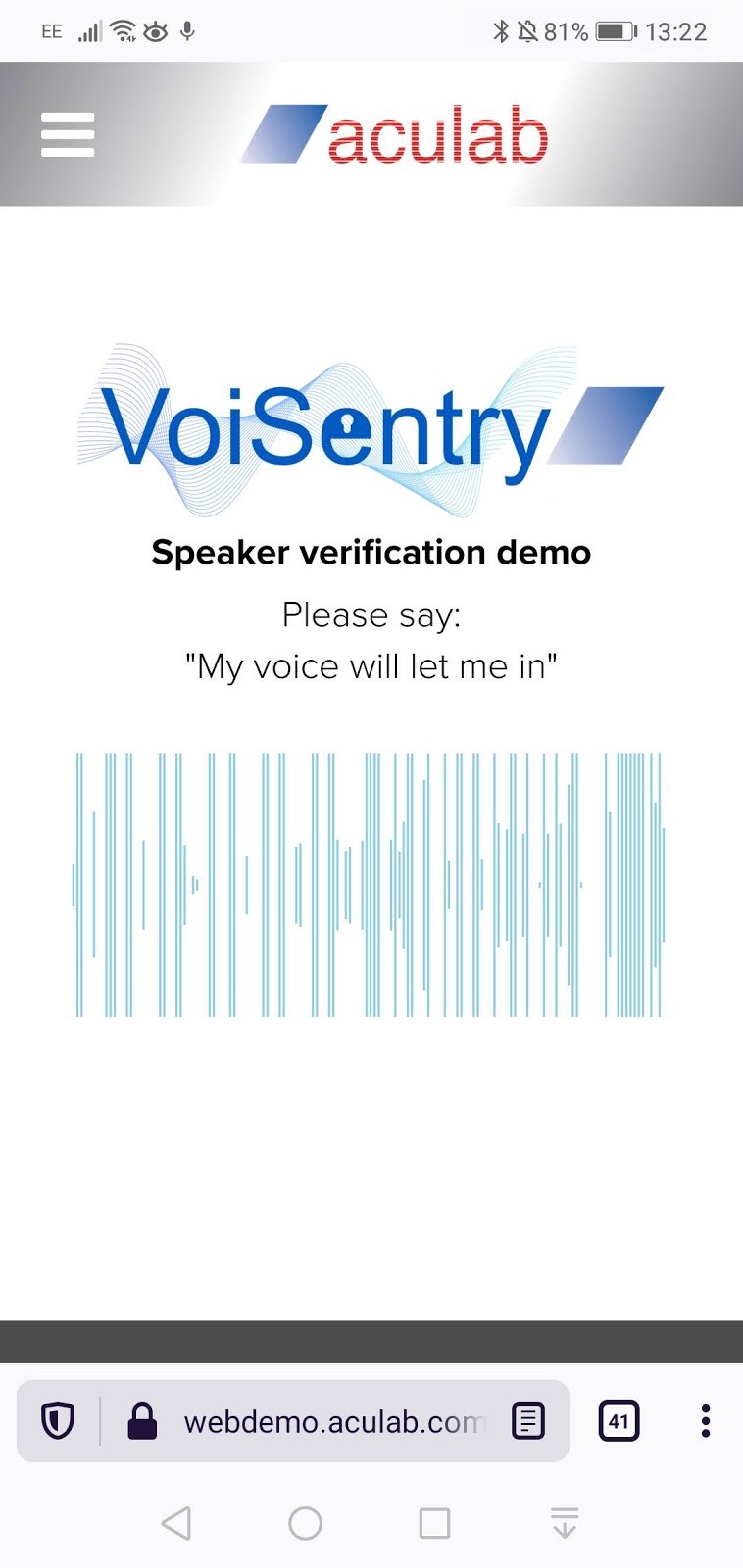

To understand this problem, we’ve put Aculab’s VoiSentry algorithm to the test. A voiceprint was created without a mask. Four different styles of face covering were then worn, one after the other, and access was attempted.

The face coverings being used for the test

#1: Filtering Facepiece (FFP3)

A heavy-duty mask, similar to the N95 mask. N95 is a US rating roughly comparable to the European FFP2 class, whereas FFP3 is the highest of the European FFP classes.

#2: Fabric Mask

This mask is made of a light, stretchy fabric. Some light fabric masks, however, are tighter, and wrap around the mouth and nose fully.

#3: Disposable Surgical Mask

One of the most commonly available masks, this is made out of a light fabric and creates as much of a seal around the mouth and nose as it can with a small strip of pliable plastic that bends to follow the contours of your face.

#4: Woolen Scarf

Not officially a mask, but a face covering. It also works as a stand-in for other loose face coverings, such as veils.

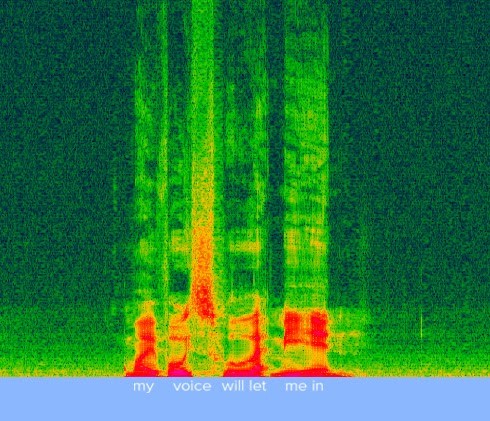

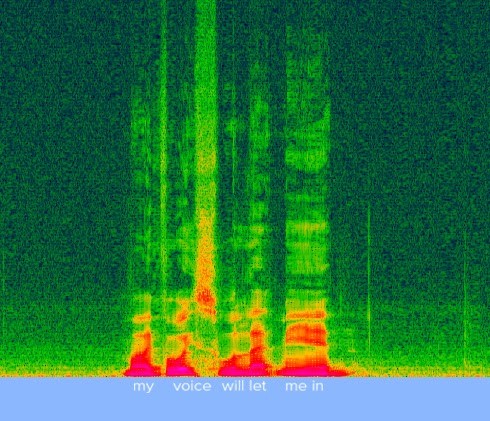

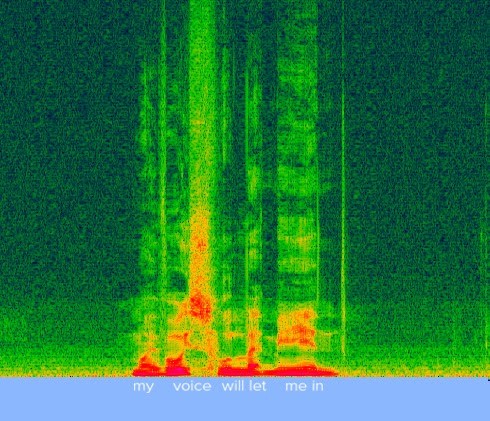

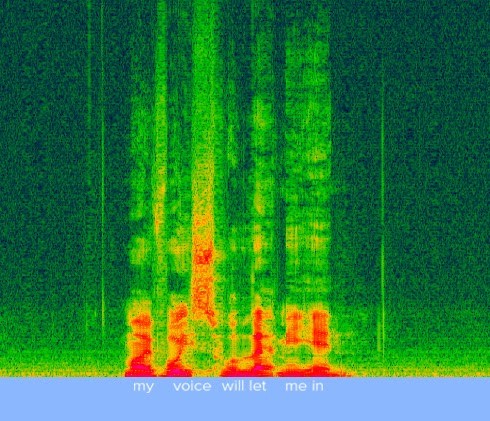

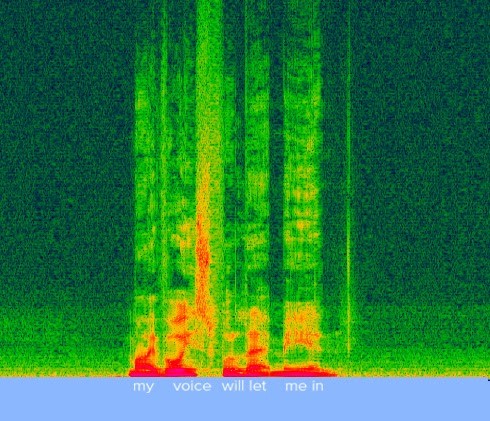

Initial Spectrogram Tests

The spectrograms were produced by recording a voice repeating the passphrase “my voice will let me in”, which is needed to create a voiceprint with VoiSentry. The audio was recorded in a small, quiet room, using a phone to emulate the most common microphone type. The audio files were then fed into an audio processing program and through a spectrogram plugin. The passphrase was repeated whilst wearing each face covering, matching the intonation, speed and diction of the control recording as closely as possible, at the same distance from the microphone and in the same position in the room, so that as far as possible the only variable was the face covering itself.
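For readers who want to see what a spectrogram plugin does under the hood, here is a minimal sketch of a short-time Fourier transform using only the Python standard library. It is not the tool used for the images in this post. The frame size, hop size and the synthetic 1 kHz test tone are assumptions chosen to keep the example small, and a real recording would be loaded from an audio file instead.

```python
import cmath
import math

def spectrogram(samples, frame_size=256, hop=128):
    """Return one list of magnitudes (bins 0..frame_size//2) per frame."""
    # Hann window to reduce spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            # Direct DFT of one bin; slow, but dependency-free.
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames

def peak_bin(magnitudes):
    """Index of the strongest frequency bin in one frame."""
    return max(range(len(magnitudes)), key=magnitudes.__getitem__)

# Synthetic stand-in for a recording: a 1 kHz tone sampled at 8 kHz.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * n / sample_rate) for n in range(1024)]

frames = spectrogram(tone)
peak_hz = peak_bin(frames[0]) * sample_rate / 256
print(len(frames), peak_hz)
```

Plotting the magnitudes frame by frame, usually on a logarithmic scale, gives the familiar time-frequency picture. Comparing masked and unmasked recordings then amounts to comparing the energy in each frequency band.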


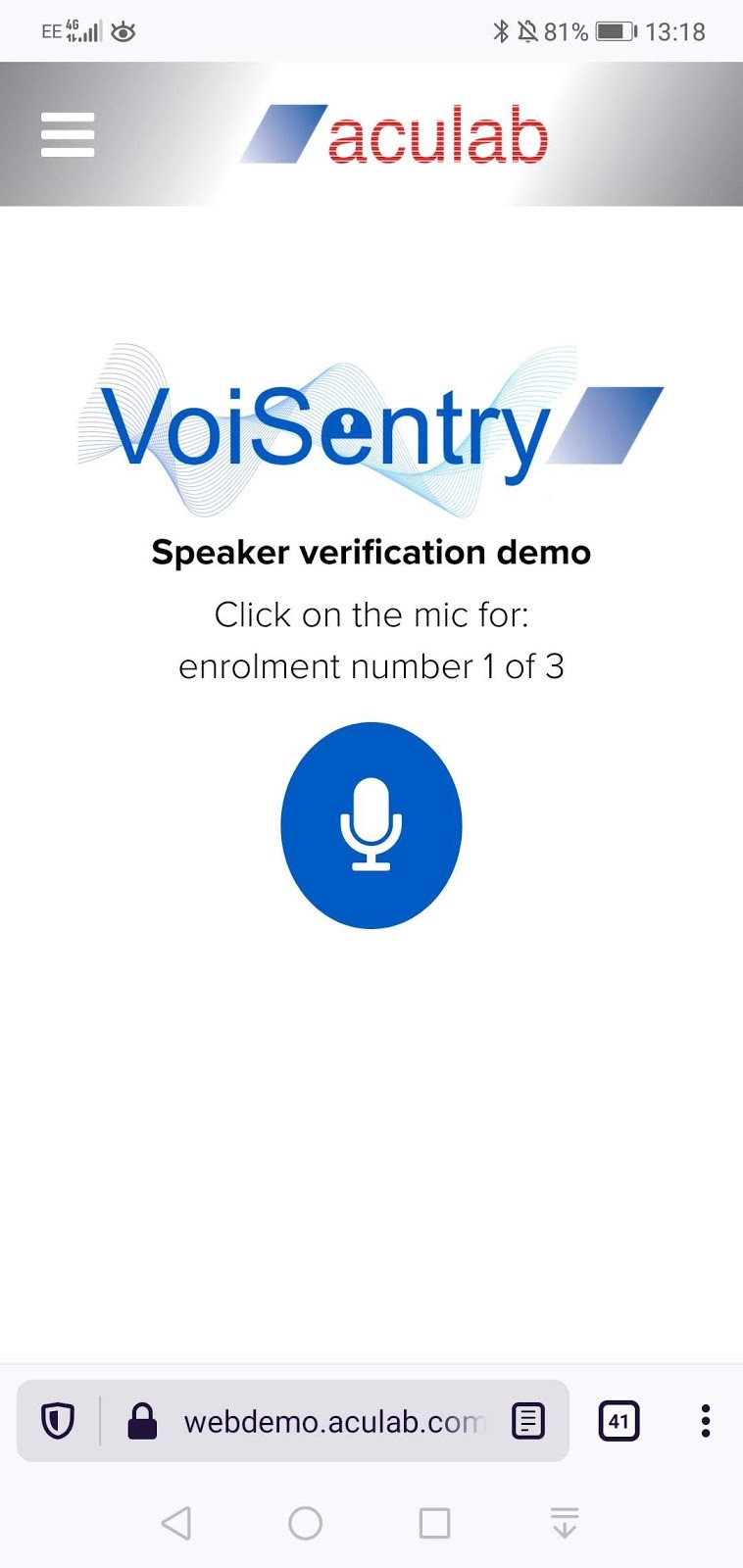

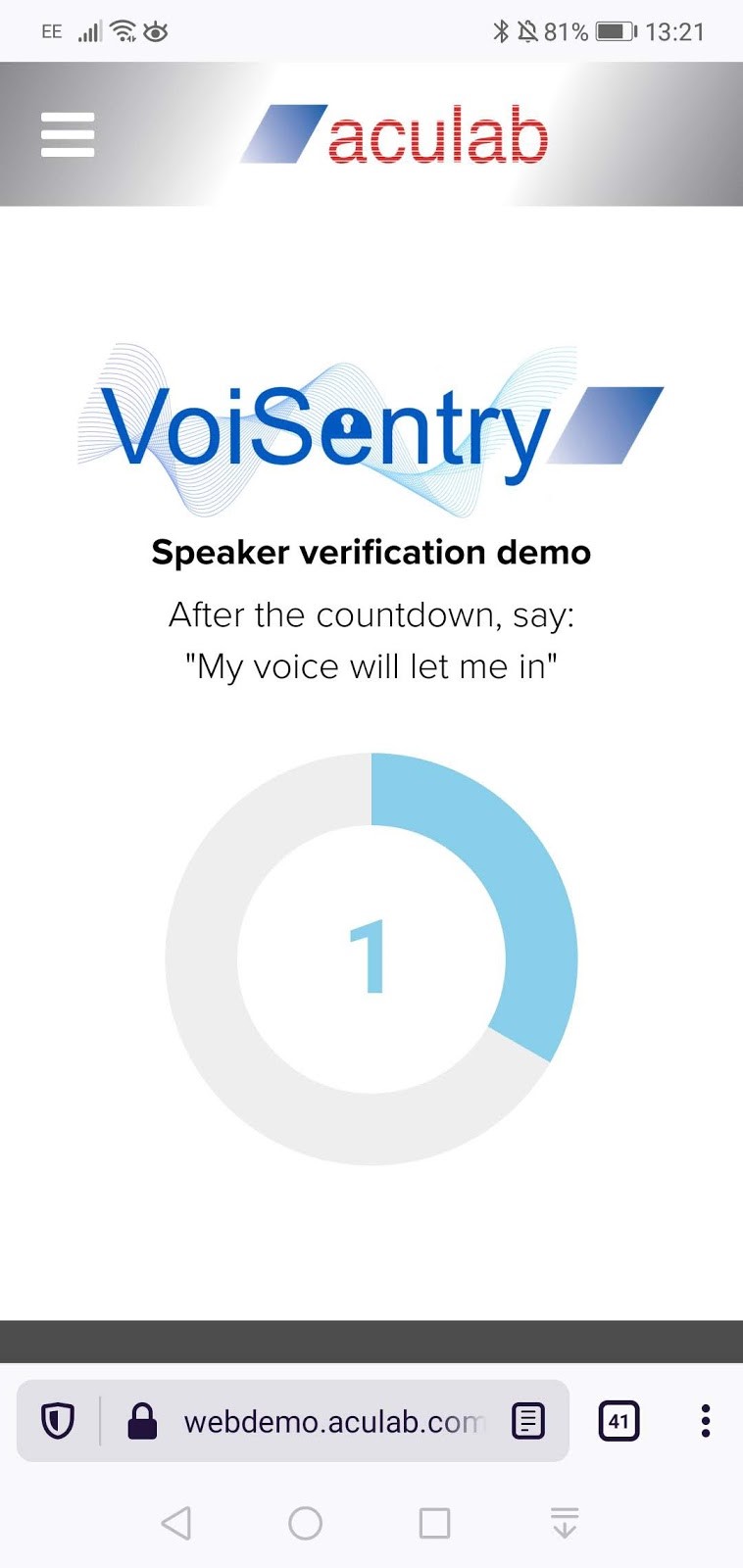

VoiSentry Tests

VoiSentry enrolment and initial verification of the voiceprint were carried out using a mobile phone, with no mask. Three verification attempts were then made against this initial enrolment for each face covering. Distance to the microphone, diction and other variables were kept the same, with some organic variability allowed.


RESULTS

#1: Filtering Facepiece (FFP3)


#2: Fabric Mask


#3: Disposable Surgical Mask


#4: Woolen Scarf


Conclusions

These tests show that VoiSentry doesn't seem to be affected by the presence of masks.

Tests were also done with deliberate attempts to modulate the voice, and the way the passphrase was spoken, to test the presentation attack detection, or imposter detection. This worked well with all masks, showing that VoiSentry can still confirm a speaker's identity, even when wearing a protective mask.

What’s more, even if a genuine speaker were rejected while wearing a mask (which did not happen in these tests), all that would be needed is a simple update of their model to regain correct operation. VoiSentry provides a simple mechanism to update its voiceprints in cases such as this, to take mask wearing into account.

The efficiency and efficacy of VoiSentry lie in the fact that the algorithm has been designed to detect and verify users in noisy environments, and to take into account differences in acoustic properties that may come from room reverberation, background noise, and distance from the microphone.

Acoustic properties may also be affected by the hardware that is picking up the signal, which may have built-in enhancements, such as gain control, dynamic equalisation, noise suppression, and other automatic signal processing. This is quite common on mobile phones.

On the evidence of these tests, therefore, wearing a mask has little to no impact on the verification and authentication process, meaning that contactless forms of verification and identification can continue to be deployed to great effect. This enables companies, products and services to gain a competitive edge in customer service situations where voice channels are available, and where the identity of the caller is critical to a business model.

Public perception of the effect of face coverings on a person's speech is somewhat exaggerated, because human perception of speech is closely linked to visual perception. Being able to see a speaker’s mouth move, and to discern body language and subtle changes in facial movements, all helps to make communication easy.

Fortunately for Voice Biometrics, the algorithms that match a voiceprint to a user’s voice operate on a highly attuned mathematical level, rather than a psychological or psychoacoustic one. They are designed to emulate human perception, and perhaps even improve upon it.

What is interesting is that, although we can use a spectrogram to see visual differences and infer a difference in sound properties, the VoiSentry algorithm uses thousands of mathematical frames that operate over and above this dimension of our intuition. Indeed, this is the primary benefit and, some would say, the magic of using an AI algorithm: it just works.